Algorithms as Information Brokers: Visualizing Rhetorical Agency in Platform Activities

Online Search as Platform Activity

When students and scholars conduct searches using online library databases, they engage platforms from providers like Cengage, Clarivate Analytics, EBSCO, and ProQuest.1 While such library platforms are funded by subscriptions rather than advertising and may seem more “neutral” than commercial search engines (e.g., Google, Yahoo, and Bing; see Tewell for an inquiry into Google), the search tools within these platforms rely on similar digital algorithms, processes that automate the complex iterative problem of search. Within these platforms, algorithms function as information brokers that manage, control, and direct the content that platform users can search and access; in doing so, they exert rhetorical influence by determining what information matters and is available to researchers, and by providing that information across the many interfaces of the platform. This activity represents the persuasive influence of algorithms, itself an aspect of the rhetoric of platforms. Since platform algorithms are carefully guarded trade secrets, uncovering the extent of persuasive influence challenges traditional methods of identifying rhetorical activity.

Studying the persuasive influence of digital algorithms informs theories of rhetoric (Brock and Shepherd; Ingraham), challenges existing methods of rhetorical analysis (Beck), and rearticulates understandings of ethics (Brown). In all cases, digital algorithms pose problems for studying rhetorical agency, which Cheryl Geisler calls “a central object of rhetorical inquiry” (13). In promoting rhetorical code studies as a method for studying persuasive computer algorithms, Beck recognizes agency as encoded in the language acts of mathematicians and programmers. In describing procedural rhetoric as “the art of persuasion through rule-based representations and interactions… tied to the core affordances of the computer” (ix), Ian Bogost identifies agency in the programmed procedurality of computer-based algorithmic processes. And in seeking to understand “how software’s ethical programs are written and rewritten” (loc. 246), Brown locates agency in the programs that control access to and from other agents and assets in a networked system. Drawing on and extending such nuanced understandings of algorithmic agency, I advocate for a comprehensive approach to rhetorical agency as ecologically shared enactments—what Jenny Rice calls “a matter of complex aggregation” (Walsh et al. 436)—that emerge in online search, combining programmer encoding, computational procedures, algorithmic matches, and user activities. Yet, because accessing the highly profitable trade secrets of encoded language acts in algorithmic code remains untenable, the field of rhetorical studies would benefit from additional methods for identifying and studying rhetorical agency surrounding algorithm-centered functions in digital platforms.

This article speculates on methods available to rhetoricians to identify and study rhetorical agency that emerges, as Laurie Gries put it, “from the entangled associations among various things, human and otherwise, that constitute everyday life” (Walsh et al. 438) during online search on digital platforms. It considers digital algorithms as rhetorical, and it identifies the rhetoric emerging around algorithmic activity as an aspect of what Tarleton Gillespie calls the politics of the platform. This article describes the influence of algorithms and users on one another in the research platform, represents this influence as rhetorical, and visualizes this shared rhetorical activity. Using the example of a student conducting online research in a library database as a heuristic, the article suggests methods for measuring and describing shared rhetorical agency.

Sharing Rhetorical Agency in Online Research

Conducting online search puts users in mediated relation with digital algorithms. At first glance, the activities emerging from these relations seem easily sorted into user- or algorithm-controlled processes: users enter search terms, algorithms make matches and return results, and users select results. Yet too simple an approach ignores the agency that algorithmic processes enact. The activity of crawling websites, of choosing which websites to crawl, and of determining which data and metadata to index falls squarely in the realm of algorithmic agency. Similarly, the work of matching user-entered keywords to procedurally determined indexed data seems an entirely algorithm-based rhetorical activity. And while users may select best matches from among sorted results on a search engine results page (SERP), relevance sorting is generated by the algorithmic processes that identify matches and results.

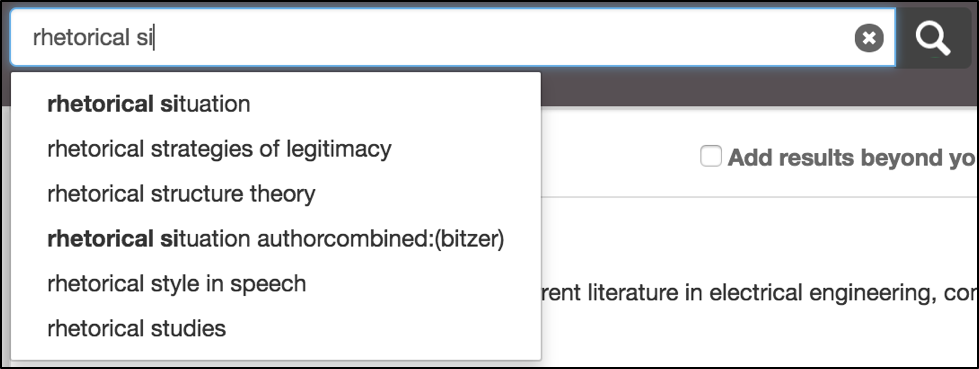

Even this nuanced depiction of agency does not adequately address the complexity of relations among users and algorithms in search platforms. Users are influenced by prior experiences with search platforms to construct terms that are best “understood” by algorithmic processes. Auto-completion suggestions (depicted in Fig. 1) that appear in a search field help users hone their vocabulary to match prior successful searches.

Predictive auto-completion suggestions are shaped in part by the user’s prior activities on that browser and search platform as collected in user profiles (if logged into an account) and cookies, files used to store user-generated information for reference by the search platform’s procedures. Even during the relatively stable input process, which seems largely controlled and managed by users, the influence of user activity and algorithmic activity on the input blurs. In rhetorical terms, agency on a search platform is shared and emerges dynamically as “a way of acting” (Cooper 373) among users and algorithmic processes. Agency may be better depicted on a shifting continuum between users and algorithmic processes than as swinging between user-directed and algorithm-directed influence. Understanding such shared agency requires an approach to rhetorical agency that takes into account the combined activities of human and nonhuman entities.

Visualizing Rhetorical Agency in Online Research

Algorithms and users collaborate, in a manner akin to Marilyn Cooper’s ecological model, to initiate, manage, and control the flow of data across platforms. This data is managed by algorithmic processes put in place by developers, programmers, and mathematicians across managed platforms, and it is used by advertisers, researchers, hackers, and citizens alike. Agency in such networked environments is difficult, but not impossible, to trace as it emerges along the continuum of user and algorithm.2

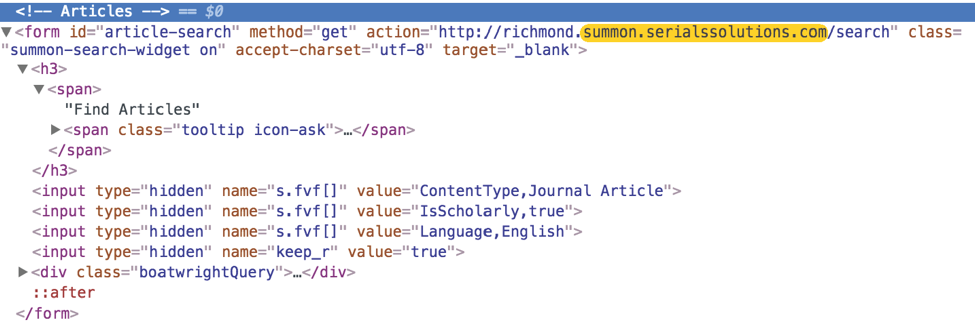

To illustrate the challenges of tracing agency as it emerges dynamically during online research, consider this common scenario. Students are asked to synthesize a position from academic sources of their choosing. They conduct research on personal laptops connected to the campus network and start research with an academic database from the campus library’s subscriptions. Students visit the library webpage, select the “Articles” tab to access the main search window, and enter search terms appropriate to the chosen topic (see Fig. 2).

Researchers engage the Summon® Service platform by ProQuest the moment they load the search page. They may not know they have engaged a platform, but inspecting the code on the search page (see Fig. 3, highlighted) reveals that the platform is summon.serialsolutions.com and that the search will return matches to their search terms from among English-language scholarly journal articles.

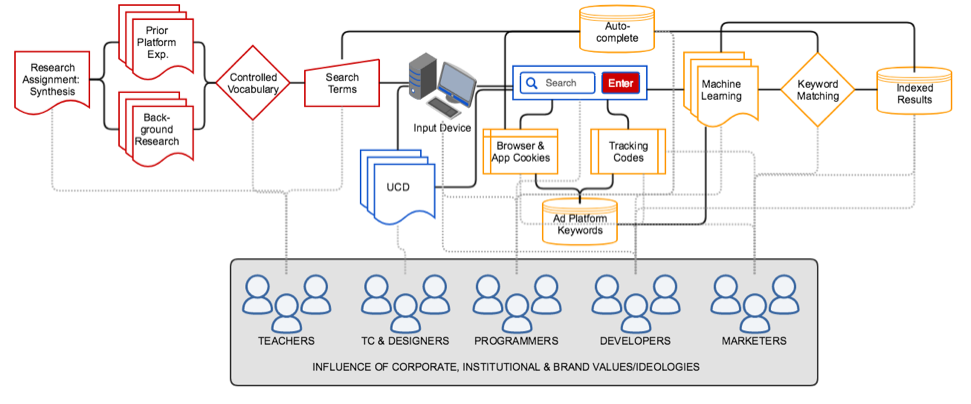

In what follows, I isolate the activity of searching for analysis. Careful scrutiny of this activity unpacks the platform, algorithm, environmental, and human conditions that coalesce in assemblage activity to communicate the search term(s) to the algorithm for matching to indexed data. Such critical attention helps identify the methods required to trace assemblage agency. Fig. 4 represents the results of this critical scrutiny, a flowchart of assemblage platform activity enacted in the moment of entering search terms.

In terms of the research scenario described earlier, the students’ prior experiences with search on the platform, instruction and practice in information literacy, background research and knowledge, and familiarity with topical keywords represent researcher contributions and activities (depicted in red). These aspects of the students’ contributions are heavily influenced by teams and individuals teaching information literacy and search skills, programming auto-completion suggestions, and designing the search interface and SERP. In addition to embedding their own team and individual values into their work, these groups are themselves heavily influenced by the corporate, institutional, and brand values and ideologies for which they labor (represented by the gray box). Similar tracings of influence can be revealed in the user-centered design elements of input device and interface (depicted in blue in the center of the figure) and in the algorithm-centered activities engaged during the search process (depicted in orange). The search box and the “enter” button are aspects of a platform interface. Tracing the agency of that interface requires methods that address human and nonhuman entities, that measure and trace algorithm, platform, interface, and human activities coalescing and emerging in real time during the search process.

This emergent assemblage activity is rhetorical agency—a product of human and nonhuman entities working alongside each other in complex platforms. While posthuman and new materialist theories provide a methodological framework for understanding such agency, the specific methods used to trace that agency are not likely found among traditional rhetorical methods. Shifting to an emergent assemblage-focused inquiry requires methods that can either capture or provide evidence of assemblage activity at work in the often-hidden platform interactions that persuade users, human and nonhuman alike, toward specific activities.4

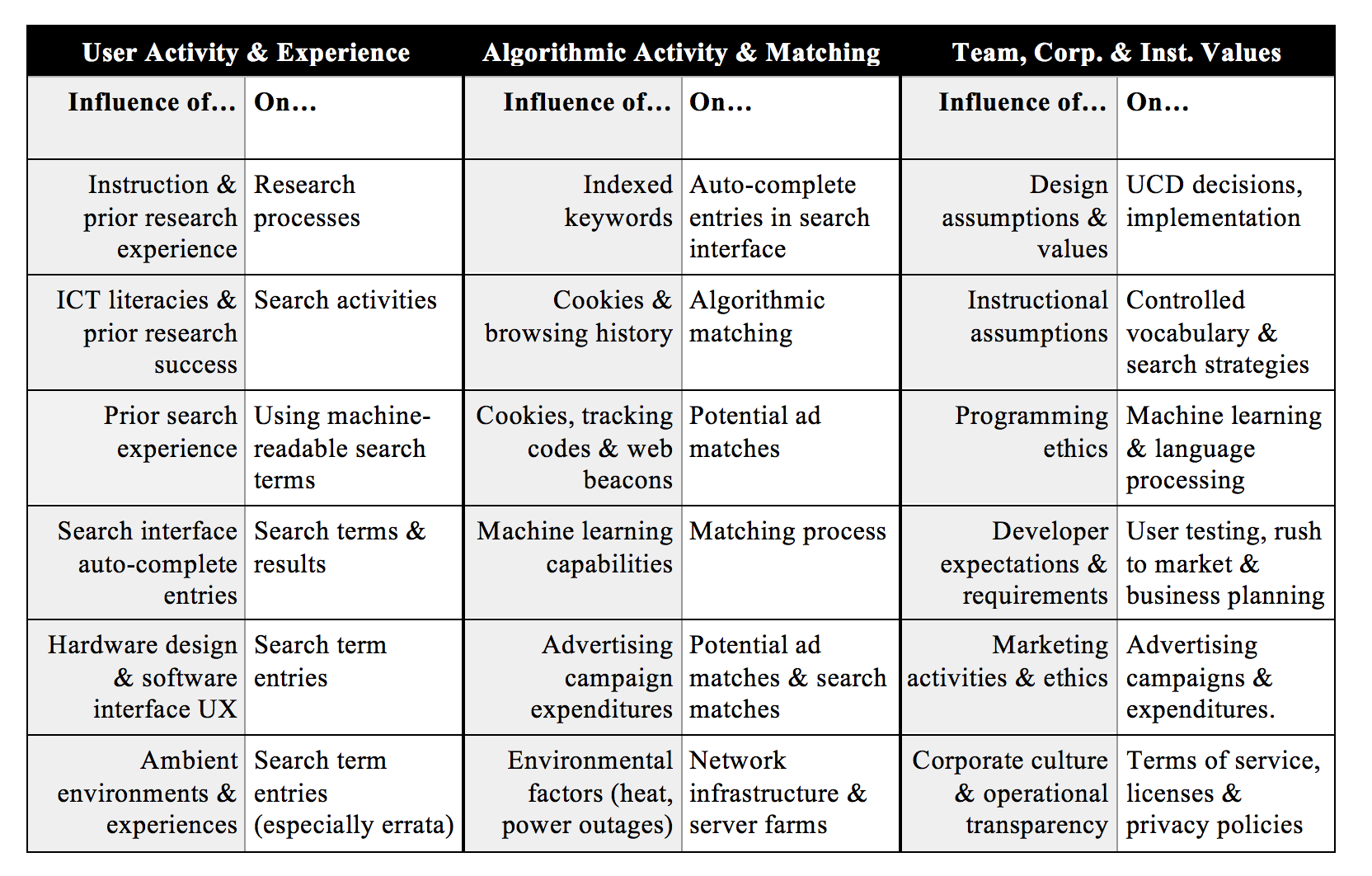

In order to trace the assemblage rhetorical activity in the research process, researchers need to collect, measure, and report on influences affecting 1) user activity and experience; 2) algorithmic activity and matching; and 3) team, corporate, and institutional values. For each of these areas of influence on particular activities in the research process, researchers can identify and collect data that can be combined, analyzed, and coded toward describing the origins and directions of assemblage agency. Table 1 proposes influences that can be collected and measured to depict assemblage activity.

For example, in addressing the influence of user activity and experience (as seen in the first column in Table 1), researchers might ask students to describe their prior experiences with search engines. These responses could help researchers estimate how prior experience influences students’ choice of search terms they expect to be machine readable. Identifying such data points provides only the raw materials that rhetoricians can use to trace the persuasive activities of assemblage agency.

Placing these data points in relation to one another while tracing the flow of agency across assemblage entities is the goal of this approach to accounting for rhetorical agency. Such an approach requires an associative framework. User experience (UX) testing provides a chronological timeline that enables researchers to attach online research activities to timestamps. As each action emerges through assemblage activity, it may be related chronologically to concurrent, preceding, and succeeding actions. For example, as the researcher begins to enter search terms, UX testing provides timestamped, on-screen evidence of real-time autocomplete suggestions offered by the search engine’s algorithmic activity (see the example in Fig. 1). Preceding, concurrent, and succeeding network activity collected by inspecting HTTP Archive (HAR) files4 from the browsing session may be overlaid on the user’s activities using timestamps to visualize the way agency rapidly shifts across the search interface to and from the user and the algorithm. Additional contextual data points from pre-search research literacy narratives and post-search survey questionnaires may be mapped onto the timeline to explain the researcher’s specific search term choices, search result selections, and research methods. Together, these layered data points would begin to visualize how agency emerges over time in assemblage online activity.
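The timestamp overlay described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a tool the article prescribes: it assumes the UX session has already been exported as a list of timestamped user actions and that the browser saved the same session as a HAR 1.2 file. The HAR fields it reads (`log.entries`, `startedDateTime`, `time`, `request.method`, `request.url`) come from the HAR format; the names `TimelineEvent`, `har_events`, and `merge_timeline`, along with the sample URL, are hypothetical.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class TimelineEvent:
    """Hypothetical record for one moment on the research timeline."""
    time: datetime   # timezone-aware timestamp
    source: str      # "user" (from the UX recording) or "network" (from the HAR file)
    label: str       # short description of the action


def parse_har_time(value: str) -> datetime:
    """Parse a HAR startedDateTime (ISO 8601) into an aware datetime."""
    # Older Python versions reject a trailing "Z", so normalize it to "+00:00".
    if value.endswith("Z"):
        value = value[:-1] + "+00:00"
    return datetime.fromisoformat(value)


def har_events(har_text: str) -> list:
    """Turn each HAR log entry into a network event on the timeline."""
    entries = json.loads(har_text)["log"]["entries"]
    events = []
    for entry in entries:
        request = entry["request"]
        # entry["time"] is the total elapsed time of the request in milliseconds
        label = f'{request["method"]} {request["url"]} ({entry["time"]:.0f} ms)'
        events.append(TimelineEvent(parse_har_time(entry["startedDateTime"]), "network", label))
    return events


def merge_timeline(user_events: list, network_events: list) -> list:
    """Interleave user and network events in chronological order."""
    return sorted(user_events + network_events, key=lambda e: e.time)


if __name__ == "__main__":
    # Hypothetical session: the student types a term, the platform requests
    # suggestions, and the suggestions appear on screen.
    har_text = json.dumps({"log": {"version": "1.2", "entries": [{
        "startedDateTime": "2017-06-12T14:03:01.250Z",
        "time": 84.0,
        "request": {"method": "GET", "url": "https://example.org/suggest?q=rhet"},
    }]}})
    user_events = [
        TimelineEvent(datetime(2017, 6, 12, 14, 3, 1, tzinfo=timezone.utc), "user", "types 'rhet'"),
        TimelineEvent(datetime(2017, 6, 12, 14, 3, 1, 400000, tzinfo=timezone.utc), "user", "suggestions appear"),
    ]
    for event in merge_timeline(user_events, har_events(har_text)):
        print(event.time.isoformat(), event.source, event.label)
```

Sorting on a single timezone-aware timestamp is the simplest way to interleave the two sources; it assumes the screen recorder’s clock and the browser’s clock agree, which an actual study would need to verify or correct for.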

Overlaying a theoretical approach on the chronological framework of UX testing represents an important closing step to this speculative exercise. I draw on Jane Bennett’s vital materiality to further comment on emergent aspects of algorithmic agency. Bennett defines vitality as “the capacity of things—edibles, commodities, storms, metals—not only to impede or block the will and designs of humans but also to act as quasi agents or forces with trajectories, propensities, or tendencies of their own” (loc. 62). Identifying vital materiality in the online search process requires capturing the attitudes and activities of material aspects of search. Such material aspects include ambient temperature and noise of the search environment; material aspects of the user interface, including keyboard, mouse, trackpad, and monitor; network infrastructure engaged through the online search process and environmental conditions surrounding that infrastructure; and corporate and governmental facilities through which network access is managed, monitored, surveilled, and administered. Isolating, identifying, measuring, and describing these material conditions and situations, however messy and imperfect such an approach may be, can contribute discrete data points as specified in Table 1. These data points can, in turn, be mapped to timestamps from UX tests to determine logical relations among assemblage entities contributing to the emergent agency of online research. For example, network speeds measured during a research session can be mapped to specific moments in the UX test timeline to discover whether network lag, throttling, or traffic might influence the research activity happening at that moment, from entering search terms to awaiting search results. While network conditions are not directly engaged in the research process, those conditions nevertheless represent “forces with trajectories… of their own” (Bennett loc. 62) that contribute to the shared agency assembling around online research.
Network conditions are among many possible forces that affect assemblage agency emerging around algorithmic processes; mapping these material conditions to a timestamp of research activity contributes toward understanding the effects of materiality on agency.
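The mapping of network conditions onto UX timestamps can likewise be sketched. This minimal Python sketch assumes that some separate tool sampled network throughput (in Mbps) at intervals during the session; it attributes to each user action the most recent sample taken at or before that moment. The function names and the 1 Mbps `slow_mbps` threshold are hypothetical illustrations, not measured values or an established standard.

```python
from bisect import bisect_right
from datetime import datetime, timezone


def condition_at(moment, sample_times, sample_values):
    """Return the most recent measurement taken at or before `moment`, or None."""
    # bisect_right counts the samples taken at or before `moment`
    i = bisect_right(sample_times, moment)
    return sample_values[i - 1] if i > 0 else None


def annotate_with_network(user_events, speed_samples, slow_mbps=1.0):
    """Attach the prevailing network speed to each timestamped user action.

    user_events:   list of (datetime, label) pairs from the UX recording
    speed_samples: list of (datetime, mbps) pairs, sorted by time
    slow_mbps:     hypothetical threshold below which a moment is flagged as slow
    """
    times = [t for t, _ in speed_samples]
    values = [mbps for _, mbps in speed_samples]
    rows = []
    for moment, label in user_events:
        mbps = condition_at(moment, times, values)
        # Moments before the first sample have no known speed and are not flagged
        rows.append((moment, label, mbps, mbps is not None and mbps < slow_mbps))
    return rows


if __name__ == "__main__":
    def at(second):
        return datetime(2017, 6, 12, 14, 3, second, tzinfo=timezone.utc)

    samples = [(at(0), 25.0), (at(5), 0.4)]  # hypothetical throughput readings
    actions = [(at(2), "enters search terms"), (at(6), "awaits search results")]
    for moment, label, mbps, slow in annotate_with_network(actions, samples):
        print(moment.time(), label, mbps, "SLOW" if slow else "")
```

Carrying the last sample forward is only one choice; averaging samples within a window around each action, or using per-request timings from a HAR file, would be reasonable alternatives.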

Conclusion

This article has speculated on a methodological approach that may be employed to measure and trace assemblage agency that emerges through algorithmic processes. To do so, it has represented rhetorical agency as activities that influence the actions and responses of human and nonhuman entities involved in online research. It has visualized the assemblage agency that emerges around the process of entering data into an online search engine during research, and it has sought to identify aspects of user experience and activity; algorithmic activity and matching; and team, corporate, and institutional values that can be collected and measured to depict rhetorical agency more accurately as posthuman and assemblage-based rather than as either user-generated or technologically mediated. It closed with a brief example of ways vital materiality can be identified and mapped onto user experience timelines to show how agency emerges and dissolves across assemblage entities. This approach offers a fresh way of understanding rhetorical agency as built through posthuman assemblages of human and nonhuman entities engaged in collective efforts.

Endnotes

- Well-known platforms from these providers include EBSCOHost, Web of Science (Clarivate Analytics), Gale (Cengage), and ProQuest.

- Several theorists offer methods to address collective human and nonhuman agency. Bruno Latour’s actor-network-theory (ANT) offers approaches for tracing social relations among symmetric actants. Paul Prior and others offer cultural-historical activity theory as an approach for analyzing the socio-cultural and ideological contexts in which corporations and publics function. New materialist and post-human approaches like Jane Bennett’s vibrant matter, Jim Brown’s ethical programs, and Levi Bryant’s onto-cartography contribute useful perspectives. Each points toward agency as an emergent activity of collectives consisting of human and nonhuman entities in active relation with one another.

- The University of Richmond’s search interface is used as a sample, but many other academic libraries follow similar patterns.

- See www.softwareishard.com/blog/har-12-spec for technical specs on the HAR file protocol.

Works Cited

- Beck, Estee N. “A Theory of Persuasive Computer Algorithms for Rhetorical Code Studies.” Enculturation, no. 23, 22 Nov. 2016, enculturation.net/a-theory-of-persuasive-computer-algorithms. Accessed 12 June 2017.

- Bennett, Jane. Vibrant Matter: A Political Ecology of Things. Duke UP, 2010.

- Bogost, Ian. Persuasive Games: The Expressive Power of Videogames. MIT P, 2010.

- Brock, Kevin, and Dawn Shepherd. “Understanding How Algorithms Work Persuasively through the Procedural Enthymeme.” Computers and Composition, vol. 42, 2016, pp. 17-27, doi: 10.1016/j.compcom.2016.08.007.

- Brown, James J. Ethical Programs: Hospitality and the Rhetorics of Software. U of Michigan P, 2015, doi: 10.3998/dh.13474172.0001.001.

- Bryant, Levi R. Onto-Cartography: An Ontology of Machines and Media. Edinburgh UP, 2014.

- Cooper, Marilyn M. “The Ecology of Writing.” College English, vol. 48, no. 4, Apr. 1986, pp. 364-375.

- Geisler, Cheryl. “How Ought We to Understand the Concept of Rhetorical Agency? Report from the ARS.” Rhetoric Society Quarterly, vol. 34, no. 3, 2004, pp. 9-17, doi: 10.1080/02773940409391286.

- Gillespie, Tarleton. “The Politics of ‘Platforms.’” New Media & Society, vol. 12, no. 3, May 2010, pp. 347-364.

- Ingraham, Christopher. “Toward an Algorithmic Rhetoric.” Digital Rhetoric and Global Literacies: Communication Modes and Digital Practices in the Networked World, edited by Gustav Verhulsdonck and Marohang Limbu, IGI Global, 2014, pp. 62-79.

- Latour, Bruno. Reassembling the Social: An Introduction to Actor-Network-Theory. Oxford UP, 2005.

- Miller, Eric J., and A. Kenneth Rice. Systems of Organization: The Control of Task and Sentient Boundaries. Routledge, 1967.

- Prior, Paul, et al. “Re-situating and Re-mediating the Canons: A Cultural-Historical Remapping of Rhetorical Activity.” Kairos, vol. 11, no. 3, 2007, kairos.technorhetoric.net/11.3/binder.html?topoi/prior-et-al/. Accessed 19 June 2017.

- Tewell, Eamon. “Toward the Resistant Reading of Information: Google, Resistant Spectatorship, and Critical Information Literacy.” Portal: Libraries and the Academy, vol. 16, no. 2, Apr. 2016, pp. 289-310.

- Walsh, Lynda, et al. “Forum: Bruno Latour on Rhetoric.” Rhetoric Society Quarterly, vol. 47, no. 5, Oct. 2017, pp. 403-462, doi: 10.1080/02773945.2017.1369822.

Daniel L. Hocutt is the Web Manager on the Marketing & Communications team at the University of Richmond School of Professional & Continuing Studies, where he also serves as Adjunct Professor of Liberal Arts teaching undergraduate classes in research and composition. He is a doctoral candidate in English at Old Dominion University with a focus on rhetoric and technical communication. His research interests include material rhetorics and rhetorical agency where they intersect in algorithms, machine learning, and artificial intelligence. He promotes algorithmic literacy as a professional technical communicator.