Constructing Research, Constructing the Platform: Algorithms and the Rhetoricity of Social Media Research

Picture a researcher watching the first 2016 presidential debate. He notices that CNN has listed the hashtag #Debates2016 in the corner of the screen and wonders how many people are using this hashtag and what kind of interactions might result from its use. Or, imagine another researcher reading about the recent Women’s March protests. She asks herself how so many protesters coordinated across such wide geographic boundaries and what specific texts helped mobilize marchers. Where might these scholars begin to answer such questions? For many, the answer is self-evident: social media.

Social media platforms are an increasingly significant site for rhetorical action, and researchers have in turn embraced social media as both an object of study and a methodological tool. Working within the confines of the platforms they study, scholars take advantage of each space’s unique affordances and constraints to locate participants and texts. Recent studies, for example, have examined social media’s impact on literacy practices and identity (Babb; Buck), the role of Twitter hashtags in publics and protest (Hayes; McVey and Wood; Penney and Dadas), and YouTube’s potential to facilitate public debate (Jackson and Wallin; McCosker). In these studies and others, social media platforms function as key research tools.

Like any digital technology, however, these platforms are not neutral and are constructed in sometimes-invisible ways to produce the materials researchers have access to as well as their encounters with those materials. Social media platforms offer robust search functions and access to potentially unprecedented amounts of data, and while writing studies scholars have been eager to explore how the rhetorical nature of social media platforms may affect users, they too often have overlooked how these platforms inform their own researcherly interactions. In this article, I argue for a more deliberate methodological consideration of the rhetoricity of social media platforms. More specifically, I suggest that foregrounding the diverse networks of actors—including nonhuman ones—that mediate and create social media spaces equips researchers to account for how the platform constructs our research, and, likewise, how our research practices construct the platform.

Recognizing how the social media spaces we study are assembled builds on long-held assumptions about the importance of enacting reflexive research and enables digital rhetoric researchers to engage more critically—more rhetorically—with the social media platforms we study. To illustrate, I will examine how one particularly ubiquitous set of actors, algorithms, can shape research practices and results on popular social media platforms Twitter and Facebook. Researchers often rely on algorithmically constructed content—both in the form of “chatbots,” algorithmically automated users that are programmed to create content, as well as data that is a product of our own interactions with the platform—to make claims about the nature of social media. Ignoring algorithms, however, risks ignoring key rhetorical functions of social media platforms. To close, then, I’ll offer heuristic questions to assist researchers in enacting reflective research practices that account for the rhetoricity of social media platforms.

Researching Social Media

Writing studies scholars have long recognized that digital writing technologies are not neutral tools: rather, they are “constructed […] with a range of ideologies, differences, and politics at play” (Kimme Hea 274). As early as 1994, for example, Cynthia and Richard Selfe showed how seemingly transparent computer interfaces in fact reproduce class-based power structures. This scholarship—and much more (Arola; Eyman; Selber; Selfe)—speaks to writing studies’ interest in understanding how digital writing technologies like social media platforms are above all rhetorical.

These arguments have also informed conversations about digital research methods within writing studies. Scholars have argued for a rhetorical approach to digital writing research that acknowledges how digital technologies shape the research process, particularly in terms of researcher identity and positionality (Almjeld and Blair; McKee and Porter; Rickly). Most of this scholarship, however, is focused on how digital technologies change researchers’ interactions with human participants. In her study of Facebook and YouTube users’ arguments about marriage equality, for example, Caroline Dadas thoughtfully interrogates her own identity in relationship to her participants, noting how this process can be complicated in digital spaces. Filipp Sapienza similarly describes how his own identity was implicated in a study of an online community, arguing that researchers should adopt a rhetorical “flexible positionality” with participants in digital settings (91). Many of these arguments echo feminist researchers’ demands to more closely examine researcher positionality (Deutsch; Kirsch and Ritchie; Kleinsasser). Gesa Kirsch argues that one of the most notable features of feminist research is its commitment to “analyze how the researchers’ identity, experience, training, and theoretical framework shape the research agenda, data analysis, and findings” (5), and contends that researchers “must strive to establish collaborative research relations that include the diverse constituencies that are always party to the literacy events we study” (89). Taken together, this scholarship demonstrates that the field has long recognized research (online and off) as a reciprocal process that results from the relationships that researchers form with the multiple actors that constitute any research scene.

However, writing studies scholars thus far have struggled to account for the abundant nonhuman actors that also participate in our research. Social media platforms demonstrate plainly how technologies, material conditions, cultural values, and the researcher herself all work to create not a transparent, static text but a dynamic rhetorical ecology. Acknowledging the presence of nonhuman research participants—specifically, algorithms—thus extends existing ideas of positionality, reflexivity, and the rhetoricity of research, highlighting how researchers work alongside nonhuman agents to create the digital spaces we study. Simply put, researchers do not just passively use social media platforms: we actively produce them. If, as Janine Solberg argues, we need scholarship that “considers the structures of the digital tools themselves, and whose practices, values, and investments they represent” (56), then it is important to consider who or what creates social media platforms, how they do it, and to what end—and how that may ultimately shape our research processes and findings.

Algorithms and the Creation of Social Media Platforms

While there are many participants responsible for the creation of any digital research context, I turn to algorithms in particular because they so clearly illustrate how researchers work in tandem with nonhuman actors to construct social media platforms. Algorithms are “encoded procedures for transforming input data into a desired output” (Gillespie)—basically, code that automates tasks—and they are essential to any social media platform. As Estee Beck argues, algorithms function as “quasi-rhetorical agents” because “of their performative nature and the values and beliefs embedded and encoded in their structures” (“A Theory”). Users (including researchers) generate information that algorithms can then use to access and deliver content, such as search results, advertisements, or specific posts. In this way, algorithms not only construct social media platforms, but they also serve as a stark example of how researcher identity shapes any (digital) research project.

One important manifestation of algorithms in social media spaces is “chatbots,” algorithmically automated users that are programmed to create all kinds of content. Bot accounts are most prevalent on Twitter but can exist on any social media platform, and “can be deployed by just about anyone with preliminary coding knowledge” (Guilbeault and Woolley). These bots can perform a wide range of tasks from the benign to the more pernicious. While chatbots can share news (“Big Cases Bot”), handle customer queries for companies (Cairns), or even contact elected officials (“Resistbot”), they have also been shown to spread misinformation and shape international politics (Ferrara et al.). Some bots are easy to spot, identifying themselves as such either in their usernames or in their user bios. Not all bot accounts are so recognizable, however, and they are becoming increasingly influential on social media platforms, accounting for an estimated 9% to 15% of active Twitter accounts (Varol et al.).
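To make concrete what it means for a bot to identify itself "in their usernames or in their user bios," the following is a minimal sketch of such a check. It is not drawn from any study cited here: the function name, the marker words, and the example accounts are all hypothetical, and the check can only catch bots that announce themselves.

```python
import re

# Hypothetical marker words; a real study would need to justify its own list.
BIO_PATTERN = re.compile(r"\b(bot|automated)\b", re.IGNORECASE)


def self_identifies_as_bot(username: str, bio: str) -> bool:
    """Return True if the username or bio openly signals automation.

    Assumption: a username that starts or ends with "bot", or a bio that
    contains the word "bot" or "automated", counts as self-identification.
    """
    name = username.lower()
    name_hit = name.startswith("bot") or name.endswith("bot")
    bio_hit = BIO_PATTERN.search(bio) is not None
    return name_hit or bio_hit


# Invented example accounts, for illustration only.
print(self_identifies_as_bot("weather_bot", ""))
print(self_identifies_as_bot("jane_doe", "I'm a bot that posts forecasts."))
print(self_identifies_as_bot("jane_doe", "Writer and teacher."))
# A human surname such as "talbot" is flagged too, a false positive.
print(self_identifies_as_bot("talbot", ""))
```

The last example shows why even a simple rule like this one calls for interpretation: it misses every bot that does not declare itself, and it can mislabel human users whose names happen to match.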

The 2016 U.S. presidential election made evident exactly how instrumental social media bots can be, and raised questions about their role in the future. Twitter bots especially generated a massive number of texts: between the first and second debates, for example, researchers found that “more than a third of pro-Trump tweets and nearly a fifth of pro-Clinton tweets” were from bots, which amounted to “more than 1 million tweets in total” (Guilbeault and Woolley). A researcher collecting tweets for an article on digital responses to the debate, then, will likely (and potentially unknowingly) encounter some of these bot-generated tweets. Social media bots challenge our conceptions of research participants, forcing digital rhetoric scholars to confront the implications of nonhuman authorship.1 How might we distinguish between human-generated and bot-generated content on social media? Are there meaningful rhetorical differences between human and bot accounts? How do chatbots change the rhetorical functions of social media platforms, or our understandings of rhetoric itself? These are complex questions, but they will only grow more pressing as bots continue to populate social media platforms. While researchers can consult tools such as Indiana University’s Botometer to help identify bots, the question may become moot as bots grow increasingly sophisticated. Regardless of how rhetoricians theorize chatbots, it’s important for researchers to acknowledge that these automated users produce an ever-larger number of social media texts and are thus an increasingly integral element of social media research.

Social media algorithms aren’t just creating content, however: they’re also collecting information from users, including researchers, and using that data for a number of purposes, including advertising, internal analytics, personalization, and more. Perhaps most relevant to researchers, though, is how data collection tools influence how users locate and access texts on social media platforms. Social media platforms “mediate how information is accessed with personalisation and filtering algorithms” and are dependent on the data users provide, explicitly or otherwise (Mittelstadt et al. 1). Data collection algorithms thus highlight how integral researcher identity and positionality are to digital research.

Twitter’s privacy policy, for instance, notes that it collects information from users, in part to tailor the platform: “We receive information when you interact with our Services […] We use Log Data to make inferences, like what topics you may be interested in, and to customize the content we show you” (“Twitter Privacy Policy”). Similarly, Facebook explains that the information it gathers from users enables it “to deliver our Services, personalize content, and make suggestions for you” (“Data Policy”). Both these platforms use algorithms to keep track of who you interact with, what links you click on, your physical location, and more in order to make decisions about what content to deliver to you. Tarleton Gillespie describes this process as the creation of “calculated publics,” in which algorithms “help structure the publics that can emerge using digital technology” (188). Social media algorithms create specific publics, Gillespie argues, through personalized search results and custom content, thereby shaping public discourse and processes of knowledge production.

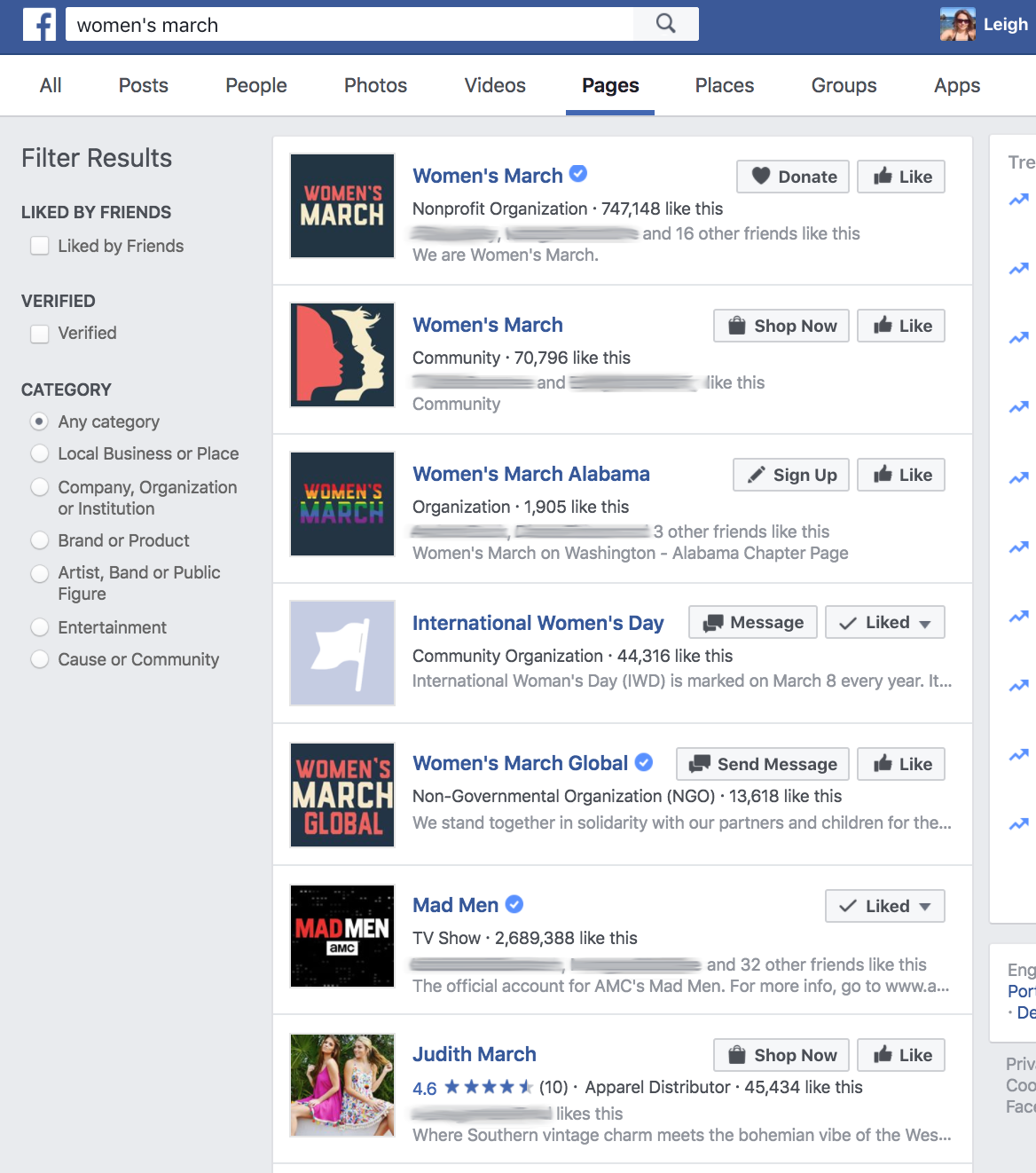

It’s easy to see how algorithmically generated “calculated publics” may impact the work of researchers who rely on social media platforms. For example, when I used my Facebook account to search for “women’s march,” I received a number of results, all filtered through my account data. I was directed to posts by my friends, pages my friends liked, nearby events, and even local (ostensibly unrelated) businesses. It wasn’t clear how any of these posts were ordered in the search results, as they varied in terms of comments/reactions, time posted, and location. Facebook presumably drew on my data (and the data of other users whom algorithms have determined to be like me) to generate the search results it decided best met my needs. Hence, any researcher investigating how Women’s March participants used Facebook to organize and coordinate protests worldwide would have to do it from the position (and resulting research lens) she’s created on Facebook. Indeed, searching Facebook is difficult without an account. Users are unable to search through the Facebook interface without logging in and instead must provide identity markers (through the data users supply in a profile and the data that Facebook collects from an account) to filter any search results or content. In other words, researchers must claim (some kind of) identity in order to use the Facebook platform, and that identity directly affects how the researcher uses the space as well as the content it provides.
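A toy model can illustrate the general point, though it makes no claim about how Facebook actually ranks results, which the platform does not disclose. In the sketch below, every name, weight, and post is invented: two hypothetical researchers run the same query over the same posts, and a scoring rule based on friendship and location returns the matches in a different order for each.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    text: str
    author: str
    city: str


@dataclass(frozen=True)
class Profile:
    friends: frozenset
    city: str


def score(post: Post, profile: Profile) -> int:
    """Hypothetical relevance score: friends count more than proximity."""
    points = 0
    if post.author in profile.friends:
        points += 2
    if post.city == profile.city:
        points += 1
    return points


def personalized_search(query: str, posts: list, profile: Profile) -> list:
    """Filter posts by the query, then order them by the searcher's profile."""
    matches = [p for p in posts if query.lower() in p.text.lower()]
    # sorted() is stable, so tied posts keep their original order.
    return sorted(matches, key=lambda p: score(p, profile), reverse=True)


# Invented posts and researcher profiles, for illustration only.
posts = [
    Post("Women's March route announced", "ana", "Chicago"),
    Post("Signs for the women's march", "ben", "Atlanta"),
    Post("Women's march carpool thread", "cam", "Denver"),
    Post("Debate watch party", "ana", "Chicago"),
]
researcher_a = Profile(frozenset({"ben"}), "Atlanta")
researcher_b = Profile(frozenset({"cam"}), "Chicago")

for profile in (researcher_a, researcher_b):
    results = personalized_search("women's march", posts, profile)
    print([p.author for p in results])
```

Even in this simplified form, neither researcher sees a neutral list: the ordering is a product of the profile each one brings to the search.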

Alongside the algorithms that mediate our use of social media platforms, then, researchers construct what Beck calls “an invisible digital identity,” which has the “[profound] potential to shape what users see online and use in their work” (“The Invisible,” 128). In other words, our identities are co-created with social media algorithms, and those identities in turn create the research scenes we study. Because our identities inevitably inform how and what we research on social media, it is therefore essential that we recognize how data collection technologies can amplify, not erase, the significance of our own positionalities.

Toward Reflexive Research

Social media platforms—like any research scene—are the rhetorical products of a diverse assemblage of actors. Algorithms are but one set of actors in the construction of these platforms, but they are crucial ones that deserve close scrutiny. Below, I’ve listed some basic questions and procedures that can help researchers to identify, as much as possible, how algorithms and other actors construct social media platforms and how they may influence research findings:

- What data is being collected, by whom, and for what purposes? Make sure you review the privacy or data policies for specific platforms. Free programs like Ghostery can also help by listing any trackers on websites you visit.

- Can you opt out of data collection? For example, Twitter allows users to do so under “personalization and data settings.”

- Are you triangulating your research by collecting data at different times, and from different computers and accounts? Doing so may draw your attention to any discrepancies that may impact your conclusions.

- What kind of identity have you created for yourself on the social media platforms you are researching? How is that identity reflected back in your results? Scrutinize the relationship between your positionality and the platform(s) you’re using.

- How might you account for the possibility of bot accounts on the platforms you’re studying?

- Will you attempt to identify bots, either through tools like Botometer, or through heuristics like this one?2 Look for ways their presence may shape your findings.
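The triangulation question above can be approached quantitatively. As one possible sketch (the function names, collection labels, and post identifiers are all hypothetical), a researcher could record the post IDs returned under each collection condition and compute the Jaccard overlap between every pair of conditions. Low overlap signals that account or timing is shaping what the platform returns.

```python
from itertools import combinations


def jaccard(a, b) -> float:
    """Share of items common to both collections, from 0.0 to 1.0."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # two empty collections are treated as identical
    return len(a & b) / len(a | b)


def compare_collections(collections: dict) -> dict:
    """Compute the overlap for every pair of named collections."""
    return {
        (x, y): jaccard(collections[x], collections[y])
        for x, y in combinations(sorted(collections), 2)
    }


# Invented post IDs gathered with the same query under three conditions.
collections = {
    "account_a_monday": ["p1", "p2", "p3", "p4"],
    "account_b_monday": ["p2", "p3", "p5"],
    "account_a_friday": ["p1", "p2", "p6"],
}

overlaps = compare_collections(collections)
for pair, value in overlaps.items():
    print(pair, round(value, 2))
```

A measure like this does not explain why the results differ, but it gives researchers a documented starting point for discussing how their accounts and collection times shaped the data.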

While the steps above can help researchers recognize how nonhuman agents work alongside us to produce social media platforms, the nature of social media is such that we will always rely on algorithms. These algorithms construct the spaces we study, and they respond to the sometimes-overlapping positions we construct as researchers and users of social media platforms. Accordingly, researchers must be mindful of the identities they create on social media, being sure to consider the ethics of these platforms as both user and researcher. Social media platforms are powerful research tools, but they are above all rhetorical, and therefore deserve our continued methodological attention. Acknowledging how social media platforms are constructed—and by whom, or what—enables researchers to enact reflective research practices that recognize the complex rhetoricity of digital technologies.

Endnotes

- See Krista Kennedy’s book Textual Curation for more discussion about the significance of bots and nonhuman authorship.

- Ferrara et al. provide a useful overview of competing approaches to social media bot identification.

Works Cited

- Almjeld, Jen, and Kristine Blair. “Multimodal Methods for Multimodal Literacies: Establishing a Technofeminist Research Identity.” Composing (media) = composing (embodiment): Bodies, Technologies, Writing, the Teaching of Writing, edited by Kristin L. Arola and Anne Frances Wysocki, Utah State UP, 2012, pp. 97-109.

- Arola, Kristin L. “The Design of Web 2.0.” Computers and Composition, vol. 27, no. 1, 2010, pp. 4-14.

- Babb, Jacob. “Writing in the Moment: Social Media, Digital Identity, and Networked Publics.” Harlot, vol. 5, 2016, http://harlotofthearts.org/index.php/harlot/article/view/327/190. Accessed 17 Nov 2017.

- Beck, Estee. “A Theory of Persuasive Computer Algorithms for Rhetorical Code Studies.” Enculturation, vol. 23, 2016, http://enculturation.net/a-theory-of-persuasive-computer-algorithms. Accessed 5 June 2017.

- Beck, Estee. “The Invisible Digital Identity: Assemblages in Digital Networks.” Computers and Composition, vol. 35, 2015, pp. 125-140.

- “Big Cases Bot.” Twitter, https://twitter.com/big_cases. Accessed 9 Jan 2018.

- Buck, Amber. “Examining Digital Literacy Practices on Social Network Sites.” Research in the Teaching of English, vol. 47, no. 1, 2012, pp. 9-38.

- Cairns, Ian. “Speed Up Customer Service with Quick Replies & Welcome Messages.” Twitter, 1 Nov 2016, https://blog.twitter.com/marketing/en_us/topics/product-news/2016/speed-up-customer-service-with-quick-replies-welcome-messages.html. Accessed 9 Jan 2018.

- Dadas, Caroline. “Messy Methods: Queer Methodological Approaches to Researching Social Media.” Computers and Composition, vol. 40, 2016, pp. 60-72.

- “Data Policy.” Facebook, 29 Sept. 2016, https://www.facebook.com/privacy/explanation. Accessed 31 May 2017.

- Deutsch, Nancy L. “Positionality and the Pen: Reflections on the Process of Becoming a Feminist Researcher and Writer.” Qualitative Inquiry, vol. 10, no. 6, 2004, pp. 885-902.

- Eyman, Douglas. Digital Rhetoric: Theory, Method, Practice. University of Michigan P, 2015.

- Ferrara, Emilio, Onur Varol, Clayton Davis, Filippo Menczer, and Alessandro Flammini. “The Rise of Social Bots.” Communications of the ACM, vol. 59, no. 7, 2016, pp. 96-104.

- Gillespie, Tarleton. “The Relevance of Algorithms.” Media Technologies: Essays on Communication, Materiality, and Society, edited by Tarleton Gillespie, Pablo Boczkowski, and Kirsten Foot, MIT P, 2014, pp. 167-192.

- Guilbeault, Douglas, and Samuel Woolley. “How Twitter Bots Are Shaping the Election.” The Atlantic, 1 Nov 2016, https://www.theatlantic.com/technology/archive/2016/11/election-bots/506072/. Accessed 31 May 2017.

- Hayes, Tracey J. “#MyNPYD: Transforming Twitter into a Public Place for Protest.” Computers and Composition, vol. 43, 2017, pp. 118-134.

- Jackson, Brian, and Jon Wallin. “Rediscovering the ‘Back-and-Forthness’ of Rhetoric in the Age of YouTube.” College Composition and Communication, vol. 61, no. 2, 2009, pp. W374-396.

- Kennedy, Krista. Textual Curation: Authorship, Agency, and Technology in Wikipedia and Chambers’s Cyclopædia. University of South Carolina P, 2016.

- Kimme Hea, Amy C. “Riding The Wave: Articulating a Critical Methodology for Web Research Practices.” Digital Writing Research, edited by Heidi A. McKee and Danielle Nicole DeVoss, Hampton P, 2007, pp. 269-286.

- Kirsch, Gesa. Ethical Dilemmas in Feminist Research: The Politics of Location, Interpretation, and Publication. SUNY P, 1999.

- Kirsch, Gesa E., and Joy S. Ritchie. “Beyond the Personal: Theorizing a Politics of Location in Composition Research.” College Composition and Communication, vol. 46, no. 1, 1995, pp. 7-29.

- Kleinsasser, Audrey M. “Researchers, Reflexivity, and Good Data: Writing to Unlearn.” Theory Into Practice, vol. 39, no. 3, pp. 155-162.

- McCosker, Anthony. “Trolling as Provocation: YouTube’s Agonistic Publics.” Convergence: The International Journal of Research into New Media Technologies, vol. 20, no. 2, 2014, pp. 201-217.

- McKee, Heidi A., and James E. Porter. The Ethics of Internet Research: A Rhetorical, Case-based Process. Peter Lang, 2009.

- McVey, James Alexander, and Heather Suzanne Wood. “Anti-Racist Activism and the Transformational Principles of Hashtag Publics: From #HandsUpDontShoot to #PantsUpDontLoot.” Present Tense, vol. 3, no. 5, 2016, http://www.presenttensejournal.org/volume-5/anti-racist-activism-and-the-transformational-principles-of-hashtag-publics-from-handsupdontshoot-to-pantsupdontloot/. Accessed 31 May 2017.

- Mittelstadt, Brent Daniel, Patrick Allo, Mariarosaria Taddeo, Sandra Wachter, and Luciano Floridi. “The Ethics of Algorithms: Mapping the Debate.” Big Data & Society, vol. 3, no. 2, 2016, pp. 1-21.

- Penney, Joel, and Caroline Dadas. “(Re)Tweeting in the Service of Protest: Digital Composition and Circulation in the OWS Movement.” New Media & Society, vol. 16, no. 1, 2014, pp. 74-90.

- “Resistbot.” Twitter, https://twitter.com/botresist?lang=en. Accessed 20 Nov 2017.

- Rickly, Rebecca. “Messy Contexts: Research as a Rhetorical Situation.” Digital Writing Research, edited by Heidi A. McKee and Danielle Nicole DeVoss, Hampton P, 2007, pp. 377-397.

- Sapienza, Filipp. “Ethos and Research Positionality in Studies of Virtual Communities.” Digital Writing Research, edited by Heidi A. McKee and Danielle Nicole DeVoss, Hampton P, 2007, pp. 89-106.

- Selber, Stuart. Multiliteracies for a Digital Age. SIU P, 2004.

- Selfe, Cynthia L. Technology and Literacy in the 21st Century: The Importance of Paying Attention. SIU P, 1999.

- Selfe, Cynthia L., and Richard J. Selfe. “The Politics of the Interface: Power and its Exercise in Electronic Contact Zones.” College Composition and Communication, vol. 45, no. 4, 1994, pp. 480-504.

- Solberg, Janine. “Googling the Archive: Digital Tools and the Practice of History.” Advances in the History of Rhetoric, vol. 15, no. 1, 2012, pp. 53-76.

- “Twitter Privacy Policy.” Twitter, 18 June 2017, https://twitter.com/privacy?lang=en. Accessed 20 June 2017.

- Varol, Onur, Emilio Ferrara, Clayton A. Davis, Filippo Menczer, and Alessandro Flammini. “Online Human-Bot Interactions: Detection, Estimation, and Characterization.” AAAI International Conference on Weblogs and Social Media (ICWSM), 2017, https://arxiv.org/pdf/1703.03107.pdf. Accessed 14 Aug 2017.

Cover Image Credit: Author

Leigh Gruwell is an Assistant Professor of English at Auburn University where she teaches graduate and undergraduate courses in writing and rhetoric. Her research focuses on digital and multimodal rhetorics, feminist rhetorics, and research methodologies, and has most recently been published in Composition Forum and Computers and Composition.
